Troubleshooting#

Here are some ‘common’ errors that you might meet at some point:

Issue with X forwarding#

If you forget to request X forwarding (graphical forwarding) when connecting, you will end up with errors such as:

qt.qpa.xcb: could not connect to display

qt.qpa.plugin: Could not load the Qt platform plugin "xcb" in "" even though it was found.

This application failed to start because no Qt platform plugin could be initialized. Reinstalling the application may fix this problem.

Available platform plugins are: eglfs, linuxfb, minimal, minimalegl, offscreen, vnc, wayland-egl, wayland, wayland-xcomposite-egl, wayland-xcomposite-glx, xcb.

Aborted (core dumped)

As a reminder to connect:

to ‘slurm-cluster’

ssh -XC {user}@cluster-access

when requesting a Slurm allocation with salloc, use the --x11 option, for example:

salloc --partition gpu --x11 --gres=gpu:1 --mem=256G srun --pty bash -l
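A quick way to catch this before launching the GUI is to check that the session has a display at all. The sketch below only assumes that X forwarding exports the `DISPLAY` environment variable; the helper name `has_x_display` is hypothetical and not part of tomwer.

```python
import os


def has_x_display(environ=None):
    """Return True if a non-empty DISPLAY variable is defined.

    `environ` defaults to the environment of the current process.
    """
    env = os.environ if environ is None else environ
    return bool(env.get("DISPLAY", "").strip())


if __name__ == "__main__":
    if has_x_display():
        print("DISPLAY is set to", os.environ["DISPLAY"])
    else:
        print("DISPLAY is not set: reconnect with 'ssh -X' and pass '--x11' to salloc")
```

A defined `DISPLAY` does not guarantee that the forwarded connection works, but a missing one is enough to explain the xcb error above.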

Issues With Qt (Application crashes with segfault)#

The tomwer GUI uses Qt through the silx.gui.qt module, which tries to use PyQt5, then PySide2, then PyQt4. The order is different for AnyQt, which is used by Orange3. If the two end up with different bindings, Signal/Slot connections can become inconsistent during widget instantiation, which leads to errors.

Be careful about this if more than one Qt binding is available. One quick and dirty fix is to edit the AnyQt/__init__.py file (the ‘availableapi’ function, for example).
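To find out whether an environment is exposed to this problem, you can list which of the bindings named above are importable. This is a minimal sketch using only the standard library; the function name `installed_bindings` is hypothetical, and the check says nothing about which binding silx or AnyQt will actually select.

```python
import importlib.util

# Binding names taken from the paragraph above, in the order silx tries them.
CANDIDATE_BINDINGS = ("PyQt5", "PySide2", "PyQt4")


def installed_bindings(candidates=CANDIDATE_BINDINGS):
    """Return the candidate modules that can be found in this environment."""
    return [name for name in candidates if importlib.util.find_spec(name) is not None]


if __name__ == "__main__":
    found = installed_bindings()
    print("Qt bindings found:", found)
    if len(found) > 1:
        print("More than one binding is installed: silx and AnyQt may not pick the same one")
```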

Issue with silx library and static TLS#

It is known that issues with OpenMP can occur on some operating systems (Debian 8). We usually compile silx with the --no-openmp flag (as is done for the distributed wheel). Otherwise this kind of error can occur (silx-kit/silx#3102). On the tomwer side, the only possible use of OpenMP is the median filter, so it is not considered a ‘big’ deal if OpenMP is deactivated.

Issue with reconstruction being slow#

If the reconstruction is done on a GPU and appears to be very slow (several minutes), the cause can be that the scan dataset is stored across several files. This is the case, for example, for an HDF5 dataset where each frame is stored in a dedicated file.
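If the frames are exposed through an HDF5 virtual dataset, one way to see how many files sit behind it is to count its sources. The sketch below assumes h5py is installed and relies on its `Dataset.is_virtual` property and `Dataset.virtual_sources()` method; the function names and the paths in the usage comment are hypothetical. It does not cover datasets assembled with external links.

```python
def count_source_files(virtual_sources):
    """Count distinct file names among objects exposing a `file_name` attribute."""
    return len({source.file_name for source in virtual_sources})


def count_files_behind_dataset(file_path, dataset_path):
    """Return the number of distinct source files of a dataset (1 if it is not virtual)."""
    import h5py  # imported here so that count_source_files works without h5py

    with h5py.File(file_path, "r") as h5f:
        dataset = h5f[dataset_path]
        if not dataset.is_virtual:
            return 1
        return count_source_files(dataset.virtual_sources())


# Usage (hypothetical file and dataset paths):
# count_files_behind_dataset("scan.nx", "/entry0000/instrument/detector/data")
```

A count close to the number of frames suggests the one-file-per-frame layout described above.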

Issue with the ‘datalistener’#

If the data listener is started and no acquisition / scan is found, then there are two possible causes:

the tomo-sync from EBS-tomo is not activated. You can check this from the bliss multivisor.

the EBS-tomo configuration is not sending the rpc-command to the computer actually running tomwer. As the configuration seems to evolve and there is no ‘rule’ for now, please check with bcu.

Today the most recently started data listener takes over the ‘targeted port’. Previously (version < 1.0) the user had to do this manually using the tomwer stop-data-listener command. This can still fail if the user launching tomwer has no right to stop the process that owns the port.
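When the takeover fails, it can help to confirm that another process still holds the port. The following is a minimal sketch, assuming the listener uses a TCP port on the local machine; the function name `is_port_in_use` is hypothetical and the port number has to be supplied by you.

```python
import socket
import sys


def is_port_in_use(port, host="127.0.0.1"):
    """Return True if a TCP socket cannot be bound to (host, port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            # Typically "address already in use"; a permission error on a
            # privileged port is reported the same way by this sketch.
            return True
    return False


if __name__ == "__main__" and len(sys.argv) > 1:
    port = int(sys.argv[1])  # port configured for the data listener
    state = "already in use" if is_port_in_use(port) else "free"
    print(f"port {port} is {state}")
```

If the port is reported as in use while no tomwer session of yours is running, the owning process probably belongs to another user.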

Slice not being reconstructed / taking much time#

A common problem is that the slice is not reconstructed or takes a long time (with recent nabu versions, > 2023). This happens when the user tries to reconstruct a slice without having a GPU.

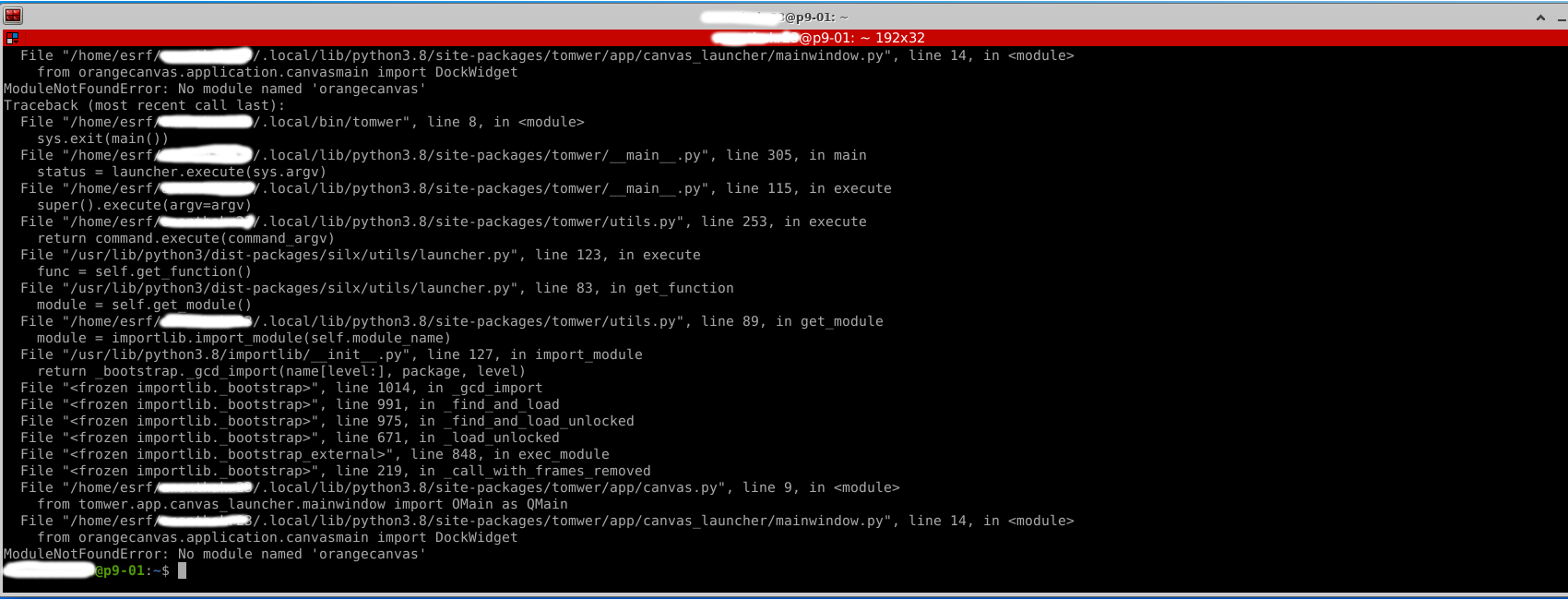
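To check whether the current machine exposes a GPU at all, you can query the NVIDIA driver tools. This sketch assumes an NVIDIA GPU and the `nvidia-smi` command on the `PATH`; the function name `nvidia_gpu_names` is hypothetical, and nabu may detect GPUs in a different way.

```python
import shutil
import subprocess


def nvidia_gpu_names(which=shutil.which, run=subprocess.run):
    """Return the lines printed by `nvidia-smi -L`, or [] if no GPU can be listed.

    `which` and `run` can be replaced to exercise the function without a GPU.
    """
    if which("nvidia-smi") is None:
        return []
    result = run(["nvidia-smi", "-L"], capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = nvidia_gpu_names()
    if gpus:
        print("\n".join(gpus))
    else:
        print("No NVIDIA GPU found: request one, e.g. with '--gres=gpu:1' in salloc")
```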

Issue with some Python library not found#

It can happen that a user has installed tomwer locally and then does a ‘module load tomotools’. In that case the local installation is the one that gets used. If the local installation was done without ‘pip install tomwer[full]’, you can get, for example, the following error:

Workflow processing#

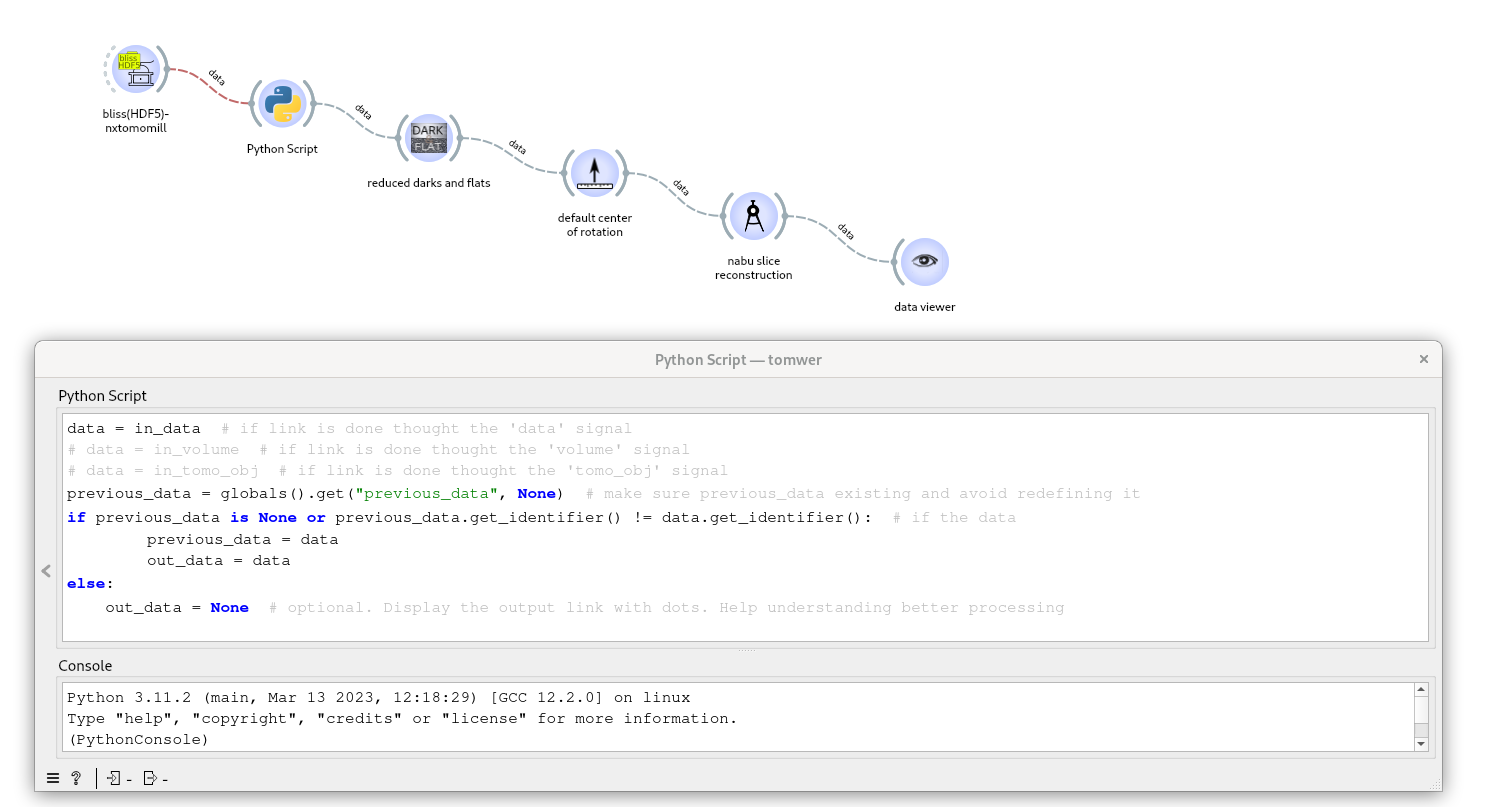

Issue with a widget processing the same dataset several times#

We have already had cases of a widget sending the same ‘data’ / ‘volume’ several times at the end of its processing. As a result the downstream processing is triggered twice (or more).

This is clearly a bug. So if you encounter it please contact us and / or create an issue about it: https://gitlab.esrf.fr/tomotools/tomwer/-/issues

One way to work around it is to add a small Python script after the widget that emits the signal several times. This script prevents the same signal from being sent twice in a row. One side effect is that the ‘’.

data = in_data  # if the link is done through the 'data' signal
# data = in_volume  # if the link is done through the 'volume' signal
# data = in_tomo_obj  # if the link is done through the 'tomo_obj' signal

# make sure previous_data exists and avoid redefining it
previous_data = globals().get("previous_data", None)

# only forward the data if it was not already sent at the previous iteration
if previous_data is None or previous_data.get_identifier() != data.get_identifier():
    previous_data = data
    out_data = data
else:
    # optional: displays the output link with dots, which helps to follow the processing
    out_data = None

For example, if the “bliss (HDF5) - nxtomomill” widget sends a dataset twice, you can filter it by adding this script to the workflow as:

Workflow processing#